AI is transforming engineering in nearly every industry and application area, particularly mining. With that come requirements for highly accurate AI models. AI models can often be more accurate than the traditional methods they replace, but this can come at a price: how is this complex AI model making decisions, and how can we, as mining engineers, verify that it is working as expected?

Enter explainable AI: a set of tools and techniques that help us understand model decisions and uncover problems with black-box models, such as bias or susceptibility to adversarial attacks. Explainability can help those in mining who work with AI understand how machine learning models arrive at predictions. This can be as simple as identifying which features drive model decisions, but it becomes more difficult when explaining complex models.

Evolution of AI models

Why the push for explainable AI? Models weren't always this complex. To see why, let's start with a simple example: a thermostat in winter. The rule-based model is as follows:

- Turn on heater below 22 degrees.

- Turn off heater above 25 degrees.

Is the thermostat working as expected? The only variables are the current room temperature and whether the heater is on, so the behaviour is easy to verify by checking the temperature in the room.
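The two rules above are small enough to write out and check directly. The following is a minimal Python sketch, not a real controller. One assumption is labelled in the code: the rules do not say what happens between 22 and 25 degrees, so the sketch keeps the heater in its current state in that band (simple hysteresis). The function names and test cases are hypothetical.

```python
def thermostat(temp: float, heater_on: bool) -> bool:
    """Return the new heater state under the two rules from the example.

    Assumption: between 22 and 25 degrees neither rule fires, so the
    heater keeps its current state (simple hysteresis).
    """
    if temp < 22:
        return True   # rule 1: turn on heater below 22 degrees
    if temp > 25:
        return False  # rule 2: turn off heater above 25 degrees
    return heater_on  # inside the 22-25 band: no change


def verify(model) -> bool:
    """Check a thermostat model against hand-written expectations (hypothetical cases)."""
    cases = [
        (20.0, False, True),   # cold room: heater switches on
        (26.0, True, False),   # warm room: heater switches off
        (23.5, True, True),    # in the band: heater stays on
        (23.5, False, False),  # in the band: heater stays off
    ]
    return all(model(t, state) == expected for t, state, expected in cases)


print(verify(thermostat))
```

Every expected outcome can be read straight off the rules, which is exactly what makes this model inherently explainable.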

Certain models, such as temperature control, are inherently explainable, either because the problem is simple or because the physical relationships are understood intuitively ("common sense"). In general, for applications where black-box models aren't acceptable, simple, inherently explainable models may work and be accepted as valid if they are sufficiently accurate.

However, moving to more advanced models has advantages:

- Accuracy: In many scenarios, complex models lead to more accurate results. Sometimes results may not be immediately obvious but can get to answers faster.

- Working with more sophisticated data: Mining engineers may need to work with complex data like streaming signals and images that can be used directly in AI models, saving significant time in model building.

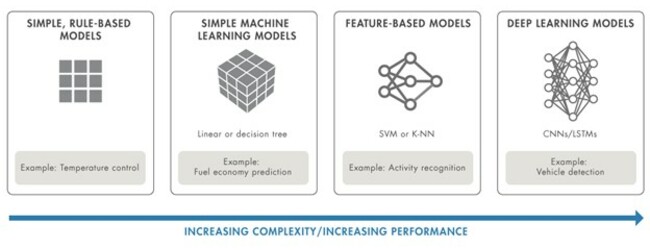

- Complex application spaces: The complexity of applications is growing and new research is exploring additional areas where deep learning techniques can replace traditional techniques like feature extraction Figure 1: Evolution of AI models. A simple model may be more transparent, while a more sophisticated model can improve performance.

Figure 1: Evolution of AI models. A simple model may be more transparent, while a more sophisticated model can improve performance.

Why Explainability?

AI models are often referred to as "black boxes," offering no visibility into what the model learned during training or whether it will work as expected in unfamiliar conditions. Explainability aims to question the model, uncover these unknowns, and explain its predictions, decisions, and actions.

Complexity vs. Explainability

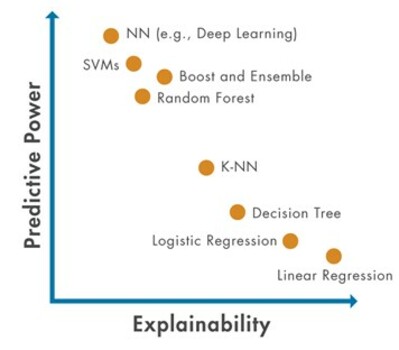

For all the benefits of moving to more complex models, understanding what is happening inside the model becomes increasingly challenging. Mining engineers therefore need new approaches to maintain confidence in their models as predictive power increases.

Figure 2: The tradeoff between explainability and predictive power. In general, more powerful models tend to be less explainable, and engineers will need new approaches to explainability to maintain confidence in their models as predictive power increases.

Using inherently explainable models can provide the most insight without adding extra steps to the process. For example, the branches of a decision tree or the weights of a linear model provide exact evidence of why the model chose a particular result.
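For a linear model, that exact evidence is simply each weight multiplied by its input value: the per-feature contributions plus the bias add up to the prediction. The Python sketch below is hypothetical; the feature names, weights, and input values are made up for illustration and do not come from a real mining model.

```python
# Hypothetical linear model: feature names, weights, and bias are illustrative only.
WEIGHTS = {"vibration": 0.8, "temperature": 0.05, "load": -0.3}
BIAS = 1.0


def predict(x: dict) -> float:
    """Linear prediction: bias plus the weighted sum of the inputs."""
    return BIAS + sum(WEIGHTS[name] * x[name] for name in WEIGHTS)


def explain(x: dict) -> dict:
    """Each feature's exact contribution is its weight times its value."""
    return {name: WEIGHTS[name] * x[name] for name in WEIGHTS}


sample = {"vibration": 2.0, "temperature": 40.0, "load": 5.0}  # made-up reading
contribs = explain(sample)
# List contributions from largest to smallest magnitude.
for name, c in sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:12s} {c:+.2f}")
print(f"bias         {BIAS:+.2f}")
print(f"prediction   {predict(sample):+.2f}")
```

Note that the largest contribution here comes from the feature with the smallest weight, because its input values are on a larger scale; reading contributions rather than raw weights avoids that trap.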

Engineers who require more insight into their data and models are driving explainability research for several reasons:

- Confidence in models: Many stakeholders are interested in the ability to explain a model based on its role and interaction with the application. For example:

  - A decision maker wants to trust and understand how the AI model works.

  - A customer wants to feel confident that the application will work as expected in all scenarios and that the behavior of the system is rational and relatable.

  - A model developer wants insight into model behavior, so that understanding why a model makes certain decisions can point to ways of improving its accuracy.

- Regulatory requirements: There is an increasing desire to use AI models in safety-critical, governance, and compliance applications that may have internal and external regulatory requirements. Although each industry will have specific requirements, providing evidence of training robustness, fairness, and trustworthiness may be important.

- Identifying bias: Bias can be introduced when models are trained on data that is skewed or unevenly sampled. Bias is especially concerning for models applied to people. It's important for model developers to understand how bias could implicitly sway results, and to account for it so that AI models "generalise": that is, provide accurate predictions without implicitly favouring particular groups or subsets.

- Debugging models: For engineers working on models, explainability can help analyse incorrect model predictions. This can include looking into issues within the model or the data. A few specific explainability techniques that can help with debugging are described in the following section.
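One simple way to look for the kind of bias described above is to break evaluation results down by subgroup instead of relying on a single overall score. The Python sketch below is hypothetical: the group labels (standing in for, say, data from two mine sites) and the results are made up, and site_B is deliberately under-sampled. What counts as an acceptable gap between groups is application-specific and is not decided by this sketch.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Per-group accuracy from (group, y_true, y_pred) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {g: correct[g] / total[g] for g in total}


# Hypothetical evaluation results: site_B has far fewer samples than site_A.
records = [
    ("site_A", 1, 1), ("site_A", 0, 0), ("site_A", 1, 1), ("site_A", 0, 0),
    ("site_A", 1, 1), ("site_A", 0, 1), ("site_A", 1, 1), ("site_A", 0, 0),
    ("site_B", 1, 0), ("site_B", 0, 0),
]
overall = sum(int(t == p) for _, t, p in records) / len(records)
print(f"overall: {overall:.2f}")
for group, acc in sorted(accuracy_by_group(records).items()):
    print(f"{group}: {acc:.2f}")
```

The overall score looks reasonable, but the per-group breakdown shows the model doing much worse on the under-sampled group, which is the kind of implicit skew worth investigating.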

Current Explainability Methods

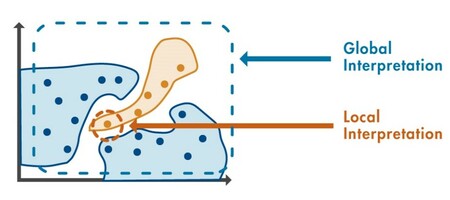

Explainability methods fall into two categories:

- Global methods provide an overview of the most influential variables in the model based on input data and predicted output.

- Local methods provide an explanation of a single prediction result.

Figure 3: The difference between global and local methods. Local methods focus on a single prediction, while global methods focus on multiple predictions.

Understanding feature influence

Global methods include feature ranking, which sorts features by their impact on model predictions, and partial dependence plots, which home in on one specific feature and indicate its impact on model predictions across the whole range of its values.
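As a minimal sketch of both global methods, the Python below ranks features by permutation importance (the increase in error when one feature's values are shuffled) and computes a partial dependence curve for one feature. Permutation importance is just one common way to rank features and is an assumption here, not a method the article prescribes. The model and data are hypothetical, and noise-free targets are used so the results are easy to reason about.

```python
import random


def model(x):
    # Hypothetical "black-box" model: strong effect of x[0], weak effect of x[1],
    # and x[2] is ignored entirely.
    return 3.0 * x[0] + 0.5 * x[1] ** 2


def mse(X, y, f):
    return sum((f(row) - t) ** 2 for row, t in zip(X, y)) / len(y)


def permutation_importance(f, X, y, n_repeats=5, seed=0):
    """Average increase in error when one feature's column is shuffled."""
    rng = random.Random(seed)
    base = mse(X, y, f)
    scores = []
    for j in range(len(X[0])):
        total = 0.0
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            total += mse(X_perm, y, f) - base
        scores.append(total / n_repeats)
    return scores


def partial_dependence(f, X, j, grid):
    """Average prediction when feature j is forced to each grid value."""
    return [sum(f(row[:j] + [v] + row[j + 1:]) for row in X) / len(X) for v in grid]


rng = random.Random(42)
X = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
y = [model(row) for row in X]  # noise-free targets for simplicity

importances = permutation_importance(model, X, y)
ranking = sorted(range(3), key=lambda j: importances[j], reverse=True)
print("importance:", [round(s, 3) for s in importances])
print("ranking:", ranking)
print("PDP for x0:", [round(p, 2) for p in partial_dependence(model, X, 0, [-1, 0, 1])])
```

The ranking recovers the order built into the toy model, the ignored feature scores zero, and the partial dependence of x0 rises linearly with slope 3, matching its coefficient.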

Two of the most popular local methods are:

- LIME for machine and deep learning: Local Interpretable Model-agnostic Explanations (LIME) can be used in both traditional machine learning and deep neural network debugging. The idea is to approximate a complex model with a simple, explainable model in the vicinity of a point of interest, and thus determine which of the predictors most influenced the decision.

- Shapley values: The Shapley value of a feature for a query point quantifies how much that feature contributes to the deviation of the prediction from the average prediction. Use Shapley values to explain the contribution of individual features to a prediction at the specified query point.
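To make the two local methods concrete, the Python sketch below explains one prediction of a toy model both ways. The model, query point, and background data are hypothetical. The LIME-style part is a simplified version of the idea described above (Gaussian perturbations around the query, an exponential proximity kernel, and a weighted linear fit); the LIME library itself samples and selects features differently. The Shapley part enumerates every feature coalition exactly and uses a small background dataset to stand in for "the average prediction", which is only practical for a handful of features.

```python
import math
import random
from itertools import combinations


def model(x):
    # Hypothetical black-box model with an interaction between x0 and x1.
    return x[0] * x[1] + 2.0 * x[2]


def coalition_value(f, query, background, subset):
    """Average prediction when features in `subset` take the query's values
    and all other features take their values from each background row."""
    total = 0.0
    for row in background:
        total += f([query[j] if j in subset else row[j] for j in range(len(query))])
    return total / len(background)


def shapley_values(f, query, background):
    """Exact Shapley values by enumerating every coalition (small n only)."""
    n = len(query)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for combo in combinations(others, size):
                s = set(combo)
                gain = (coalition_value(f, query, background, s | {i})
                        - coalition_value(f, query, background, s))
                phi[i] += weight * gain
    return phi


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def lime_slopes(f, query, n_samples=500, sigma=0.5, width=1.0, seed=0):
    """LIME-style sketch: weighted linear fit to the model near the query."""
    rng = random.Random(seed)
    d = len(query)
    A = [[0.0] * (d + 1) for _ in range(d + 1)]  # accumulates X^T W X
    rhs = [0.0] * (d + 1)                         # accumulates X^T W y
    for _ in range(n_samples):
        z = [q + rng.gauss(0.0, sigma) for q in query]  # perturb around the query
        w = math.exp(-sum((a - q) ** 2 for a, q in zip(z, query)) / width ** 2)
        row = [1.0] + z  # intercept term plus features
        y = f(z)
        for i in range(d + 1):
            rhs[i] += w * row[i] * y
            for j in range(d + 1):
                A[i][j] += w * row[i] * row[j]
    return solve(A, rhs)[1:]  # drop the intercept, keep per-feature slopes


query = [1.0, 2.0, 0.5]
rng = random.Random(1)
background = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(50)]
phi = shapley_values(model, query, background)
avg = sum(model(row) for row in background) / len(background)
print("Shapley values:", [round(p, 3) for p in phi])
print("sum of Shapley values:", round(sum(phi), 3), "vs", round(model(query) - avg, 3))
print("LIME-style slopes:", [round(s, 2) for s in lime_slopes(model, query)])
```

Because the toy model is x0*x1 + 2*x2, the LIME-style slopes should land near the local gradient (2, 1, 2) at this query point, while the Shapley values sum to the gap between this prediction and the average background prediction. The two methods answer slightly different questions, which is why they can rank features differently.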

Visualisations

When building models for image processing or computer vision applications, visualisations are one of the best ways to assess model explainability.

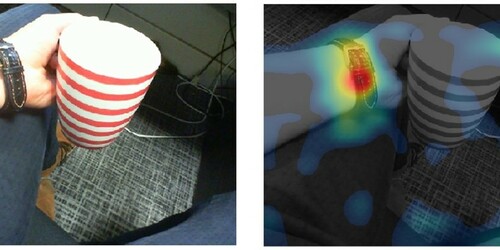

Model visualisations: Local methods like Grad-CAM and occlusion sensitivity can identify locations in images and text that most strongly influenced the prediction of the model.

Figure 4: Visualisations that provide insight into the incorrect prediction of the network.
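Occlusion sensitivity is simple enough to sketch in a few lines: cover one region of the image at a time, re-run the model, and record how much its score drops. The Python below is a minimal illustration under stated assumptions: the 4x4 "image" and the toy scoring function are hypothetical, and the patches do not overlap, whereas practical implementations typically slide a patch with a smaller stride over real network outputs.

```python
def occlusion_map(score, image, patch=2, fill=0.0):
    """Drop in the model's score when each patch-sized block is occluded."""
    h, w = len(image), len(image[0])
    base = score(image)
    heat = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            occluded = [row[:] for row in image]  # copy so the original is untouched
            for r in range(top, min(top + patch, h)):
                for c in range(left, min(left + patch, w)):
                    occluded[r][c] = fill
            drop = base - score(occluded)
            for r in range(top, min(top + patch, h)):
                for c in range(left, min(left + patch, w)):
                    heat[r][c] = drop
    return heat


def toy_score(img):
    # Hypothetical classifier score: responds only to the top-right 2x2 region.
    return sum(img[r][c] for r in range(0, 2) for c in range(2, 4)) / 4.0


image = [
    [0.1, 0.1, 0.9, 0.8],
    [0.1, 0.2, 0.9, 0.9],
    [0.3, 0.1, 0.1, 0.2],
    [0.2, 0.1, 0.1, 0.1],
]
heat = occlusion_map(toy_score, image)
for row in heat:
    print(" ".join(f"{v:.2f}" for v in row))
```

Only the occluded top-right block changes the score, so the heat map lights up exactly where the toy model is looking; with a real network, the same map would show which image regions drove a prediction.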

Feature comparisons and groupings: The global method t-SNE is one example of using feature groupings to understand relationships between categories. It does a good job of showing high-dimensional data in a simple two-dimensional plot.

These are only a few of the many techniques currently available to help model developers with explainability. Regardless of the details of the algorithm, the goal is the same: to help mining engineers gain a deeper understanding of the data and model. When used during AI modeling and testing, these techniques can provide more insight into, and confidence in, AI predictions.

Beyond Explainability

Explainability helps overcome an important drawback of many advanced AI models: their black-box nature. But overcoming stakeholder or regulatory resistance to black-box models is only one step towards confidently using AI in engineered systems. AI used in practice requires models that can be understood, that were constructed using a rigorous process, and that can operate at the level necessary for safety-critical and sensitive applications in mining. Continuing areas of focus and improvement include:

- Verification and validation (V&V): An area of ongoing research, V&V goes beyond using explainability to build confidence that a model works under certain conditions. Instead, it focuses on ensuring that models used in safety-critical applications meet minimum standards.

- Safety certification: Industries such as automotive and aerospace are defining what safety certification of AI looks like for their applications. Traditional approaches replaced or enhanced with AI must meet the same standards and will be successful only by proving outcomes and showing interpretable results.

- More transparent models: The output of the system must match the expectations of its intended users. This is something an engineer must consider from the beginning: how will I share my results with the end user?

Is Explainability Right for Your Application?

The future of AI will have a strong emphasis on explainability. As AI is incorporated into safety-critical and everyday applications, scrutiny from both internal stakeholders and external users is likely to increase. Viewing explainability as essential benefits everyone. Mining engineers gain better information for debugging their models and ensuring the output matches their intuition. They have more insight to meet requirements and standards. And they can focus on increased transparency for systems that keep getting more complex.

For more information on how AI can improve your mining business, visit https://www.mathworks.com/solutions/mining.html.

ABOUT THIS COMPANY

MathWorks

MathWorks is the leading developer of mathematical computing software. Engineers around the world use MathWorks software for innovation and development.

HEAD OFFICE:

- Level 6, Tower 2, 475 Victoria Avenue, Sydney (Chatswood), NSW 2067

- Telephone: +61-2-8669-4700

- Web: au.mathworks.com

- Email: MarketingAU@mathworks.com.au